(Left) We introduce the task of novel view synthesis for LiDAR sensors. Given multiple LiDAR viewpoints of an object, novel LiDAR view synthesis aims to render a point cloud of the object from an arbitrary new viewpoint. (Right, Top) The most closely related approaches to generating new LiDAR point clouds are LiDAR simulators, which suffer from limited scalability and applicability and fail to produce realistic LiDAR patterns. Furthermore, traditional NeRFs are not directly applicable to point clouds. (Right, Bottom) By contrast, we propose a novel differentiable framework, LiDAR-NeRF, with an associated neural radiance field, which avoids explicit 3D reconstruction and the use of game engines. Our method enables end-to-end optimization and encodes the attributes of 3D points into the learnable field.

We introduce a new task, novel view synthesis for LiDAR sensors. While traditional model-based LiDAR simulators combined with style-transfer neural networks can be applied to render novel views, they fall short of producing accurate and realistic LiDAR patterns because their renderers rely on explicit 3D reconstruction and game engines, which ignore important attributes of LiDAR points. We address this challenge by formulating, to the best of our knowledge, the first differentiable end-to-end LiDAR rendering framework, LiDAR-NeRF, which leverages a neural radiance field (NeRF) to jointly learn the geometry and the attributes of 3D points. However, simply employing NeRF does not achieve satisfactory results: it focuses on learning individual pixels while ignoring local information, especially in low-texture areas, which results in poor geometry. To address this issue, we introduce a structural regularization method that preserves local structural details. To evaluate the effectiveness of our approach, we establish an object-centric multi-view LiDAR dataset, dubbed NeRF-MVL. It contains observations of objects from 9 categories, seen from 360-degree viewpoints and captured with multiple LiDAR sensors. Our extensive experiments on the scene-level KITTI-360 dataset and on our object-level NeRF-MVL dataset show that LiDAR-NeRF significantly outperforms model-based algorithms.
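As a rough illustration of how a neural radiance field can yield LiDAR quantities, the sketch below applies standard NeRF volume rendering along a single beam: per-sample densities are composited into termination weights, which then give an expected distance and, with the same weights, an expected intensity and ray-drop probability. This is a minimal NumPy sketch under our own assumptions: the function name `render_lidar_ray` and its inputs are hypothetical, the per-sample values would in practice come from a trained network, and the exact rendering used by LiDAR-NeRF may differ.

```python
import numpy as np


def render_lidar_ray(t, sigma, intensity, raydrop):
    """Hypothetical helper: NeRF-style compositing along one LiDAR beam.

    t         -- (N,) sorted sample distances from the sensor origin
    sigma     -- (N,) non-negative volume densities at the samples
    intensity -- (N,) per-sample intensity predictions
    raydrop   -- (N,) per-sample ray-drop probabilities
    """
    # Interval lengths; the last interval is treated as (nearly) infinite.
    delta = np.append(np.diff(t), 1e10)
    # Opacity of each interval: alpha_i = 1 - exp(-sigma_i * delta_i).
    alpha = 1.0 - np.exp(-sigma * delta)
    # Transmittance T_i: probability that the beam reaches sample i unblocked.
    trans = np.cumprod(np.append(1.0, 1.0 - alpha[:-1]))
    weights = trans * alpha
    # Expected values under the per-sample termination weights.
    distance = np.sum(weights * t)
    exp_intensity = np.sum(weights * intensity)
    exp_raydrop = np.sum(weights * raydrop)
    return distance, exp_intensity, exp_raydrop, weights
```

For example, with samples `t = np.linspace(0.0, 10.0, 101)` and a single sharp density spike at 5 m, nearly all of the weight falls on that sample and the rendered distance is approximately 5.0.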

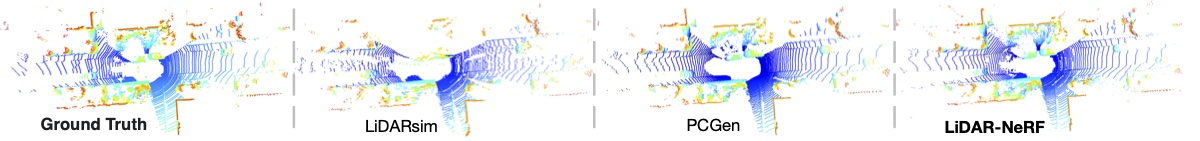

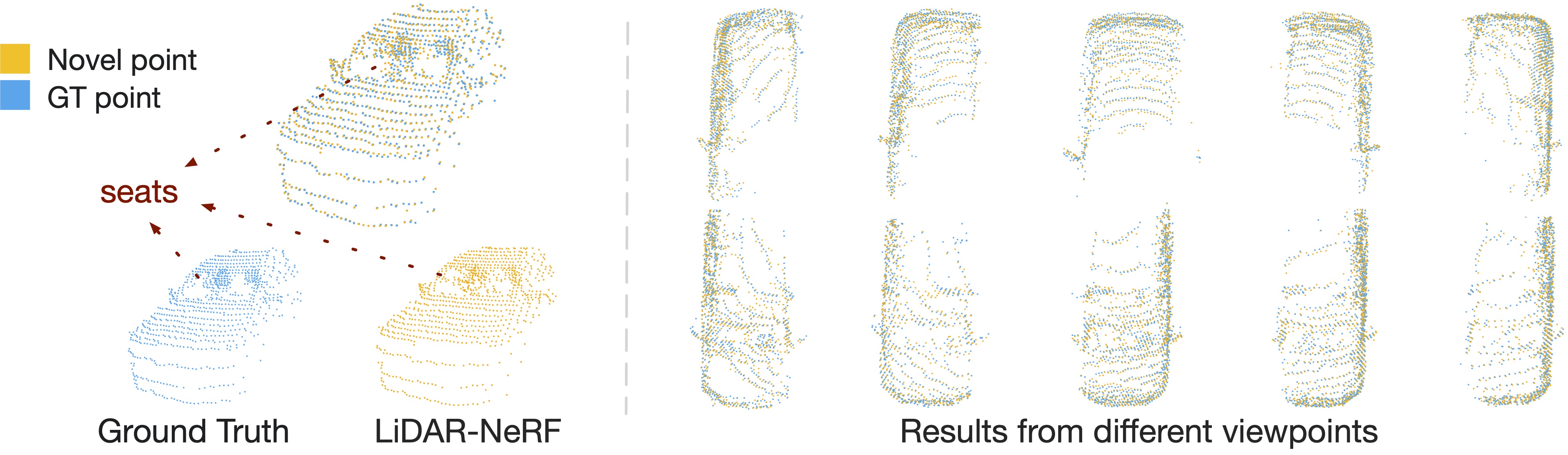

A comparison of novel-view LiDAR point clouds generated by LiDARsim, PCGen, and our LiDAR-NeRF. LiDARsim suffers from inaccuracies in its explicit 3D mesh reconstruction. PCGen overestimates object surfaces. Specifically, laser beams emitted by the LiDAR sensor can be influenced by surface material and normal direction, so that some beams penetrate car glass and reach the seats (car1 and car2), while others are lost (car3). Although an additional style-transfer network can alleviate the problem of beam loss, it does not account for special attributes such as transmission. In contrast to prior work, our proposed LiDAR-NeRF effectively encodes 3D information and multiple attributes, achieving high fidelity to the ground truth.
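Lost beams, as in car3, have a natural expression in a range-image formulation: predict a per-pixel ray-drop probability, discard pixels whose probability exceeds a threshold, and back-project only the remaining pixels to 3D points. The sketch below assumes a spinning sensor whose columns span 360 degrees of azimuth and whose rows span a vertical field of view of +2° to −24.8°, chosen only for illustration. The function name, the 0.5 threshold, and the angular conventions are hypothetical, not LiDAR-NeRF's actual settings.

```python
import numpy as np


def range_image_to_points(range_img, drop_prob, fov_up_deg=2.0,
                          fov_down_deg=-24.8, drop_threshold=0.5):
    """Hypothetical helper: back-project a range image to an (M, 3) point cloud.

    Pixels with zero range or with ray-drop probability >= drop_threshold
    are treated as lost beams and produce no point.
    """
    H, W = range_img.shape
    fov_up = np.deg2rad(fov_up_deg)
    fov_down = np.deg2rad(fov_down_deg)
    rows, cols = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    # Column -> azimuth at the pixel center: column 0 is near +pi, decreasing to -pi.
    yaw = np.pi * (1.0 - 2.0 * (cols + 0.5) / W)
    # Row -> elevation at the pixel center: row 0 is the top of the vertical FOV.
    pitch = fov_down + (1.0 - (rows + 0.5) / H) * (fov_up - fov_down)
    # Keep only beams that returned and were not predicted as dropped.
    keep = (range_img > 0) & (drop_prob < drop_threshold)
    r, yaw, pitch = range_img[keep], yaw[keep], pitch[keep]
    x = r * np.cos(pitch) * np.cos(yaw)
    y = r * np.cos(pitch) * np.sin(yaw)
    z = r * np.sin(pitch)
    return np.stack([x, y, z], axis=1)
```

Thresholding the probability gives a hard keep/drop decision per beam; sampling a Bernoulli variable per pixel instead would be an equally plausible choice for generating varied scans.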

(a) (Top) The physical model of a LiDAR can be described as follows: each laser beam originates at the sensor origin and shoots outward until it either hits a point in the real world or vanishes. One common pattern is for the laser beams to spin in a 360-degree fashion. (Bottom) We convert the point clouds into a range image, where each pixel corresponds to a laser beam. Note that we highlight one object in the different views to facilitate visualization. (b) Taking multi-view LiDAR range images with associated sensor poses as input, our model produces 3D representations of the distance, the intensity, and the ray-drop probability at each pseudo-pixel. We exploit the multi-view consistency of the 3D scene to help our network produce accurate geometry. (c) The physical nature of LiDAR sensors results in point clouds exhibiting recognizable patterns, such as the ground appearing primarily as continuous straight lines. This pattern is also evident in the transformed range images, which display significant structural features; for example, their horizontal gradient is almost zero in flat areas. These structural characteristics are therefore essential for the network to learn.
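A common way to convert a spinning-LiDAR scan into a range image, where each pixel corresponds to one laser beam, is a spherical projection: azimuth selects the column, elevation selects the row, and the pixel stores the range (here also the intensity) of the nearest return. The sketch below is one such projection in NumPy. The 64×1024 resolution and the +2° to −24.8° vertical field of view are assumptions for illustration, the function name is hypothetical, and pixels with no return are left at 0 to mark dropped rays.

```python
import numpy as np


def points_to_range_image(points, intensity, H=64, W=1024,
                          fov_up_deg=2.0, fov_down_deg=-24.8):
    """Hypothetical helper: spherical projection of an (N, 3) scan.

    Returns (H, W) range and intensity images; 0 marks pixels with no return.
    Points outside the assumed vertical FOV are clamped to the border rows.
    """
    r = np.linalg.norm(points, axis=1)
    valid = r > 0
    points, intensity, r = points[valid], intensity[valid], r[valid]
    fov_up = np.deg2rad(fov_up_deg)
    fov_down = np.deg2rad(fov_down_deg)
    yaw = np.arctan2(points[:, 1], points[:, 0])              # azimuth in [-pi, pi]
    pitch = np.arcsin(np.clip(points[:, 2] / r, -1.0, 1.0))   # elevation
    # Azimuth -> column (column 0 at yaw = +pi), elevation -> row (row 0 at top).
    u = np.floor(0.5 * (1.0 - yaw / np.pi) * W)
    v = np.floor((1.0 - (pitch - fov_down) / (fov_up - fov_down)) * H)
    u = np.clip(u, 0, W - 1).astype(np.int64)
    v = np.clip(v, 0, H - 1).astype(np.int64)
    flat = v * W + u
    # Sort by pixel, then by range, and keep the nearest return per pixel.
    order = np.lexsort((r, flat))
    _, first = np.unique(flat[order], return_index=True)
    keep = order[first]
    range_img = np.zeros(H * W)
    int_img = np.zeros(H * W)
    range_img[flat[keep]] = r[keep]
    int_img[flat[keep]] = intensity[keep]
    return range_img.reshape(H, W), int_img.reshape(H, W)
```

Keeping the nearest return per pixel mirrors the fact that a single beam reports its first hit; the explicit sort avoids relying on NumPy's unspecified result when the same index is assigned more than once.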

(a) We design two square collection paths, a small one and a large one, with lengths of 7 and 15 meters, respectively. (b) Our NeRF-MVL dataset encompasses 9 objects from common traffic categories. We align multiple frames here for better visualization.

Our LiDAR-NeRF produces more realistic LiDAR patterns with highly detailed structure and geometry.

LiDAR-NeRF effectively encodes 3D information and multiple attributes, enabling it to accurately model the behavior of beams that penetrate car glass and reach the seats. Moreover, the high quality of the results obtained from different viewpoints provides compelling evidence of our method’s effectiveness.

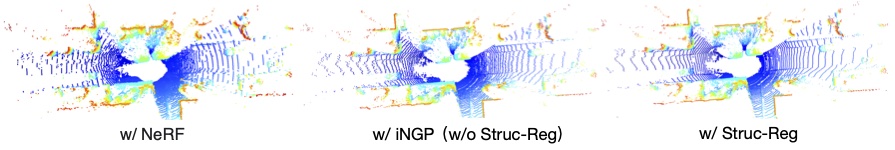

The hybrid 3D grid architecture of iNGP captures more detailed structures. Our structural regularization significantly improves geometry estimation and produces more realistic LiDAR patterns.
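One way to encode the observation that flat regions of a range image have near-zero horizontal gradients is a loss on finite-difference gradients of rendered and ground-truth range patches. The sketch below is an illustrative version of such a term, not LiDAR-NeRF's exact structural regularization: the function name is hypothetical, it compares horizontal and vertical gradients with an L1 penalty, and it uses NumPy for readability, whereas training would require an autodiff framework.

```python
import numpy as np


def gradient_structure_loss(pred, gt):
    """Hypothetical helper: L1 distance between finite-difference gradients
    of a predicted and a ground-truth (H, W) range-image patch."""
    # Horizontal gradients: differences between neighbouring columns (azimuth).
    gx_pred = np.diff(pred, axis=1)
    gx_gt = np.diff(gt, axis=1)
    # Vertical gradients: differences between neighbouring rows (beams).
    gy_pred = np.diff(pred, axis=0)
    gy_gt = np.diff(gt, axis=0)
    loss_x = np.abs(gx_pred - gx_gt).mean()
    loss_y = np.abs(gy_pred - gy_gt).mean()
    return loss_x + loss_y
```

Because the term compares gradients rather than raw ranges, it is unchanged by a constant offset and penalizes only local structural errors, so it would complement, not replace, a per-pixel range loss.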

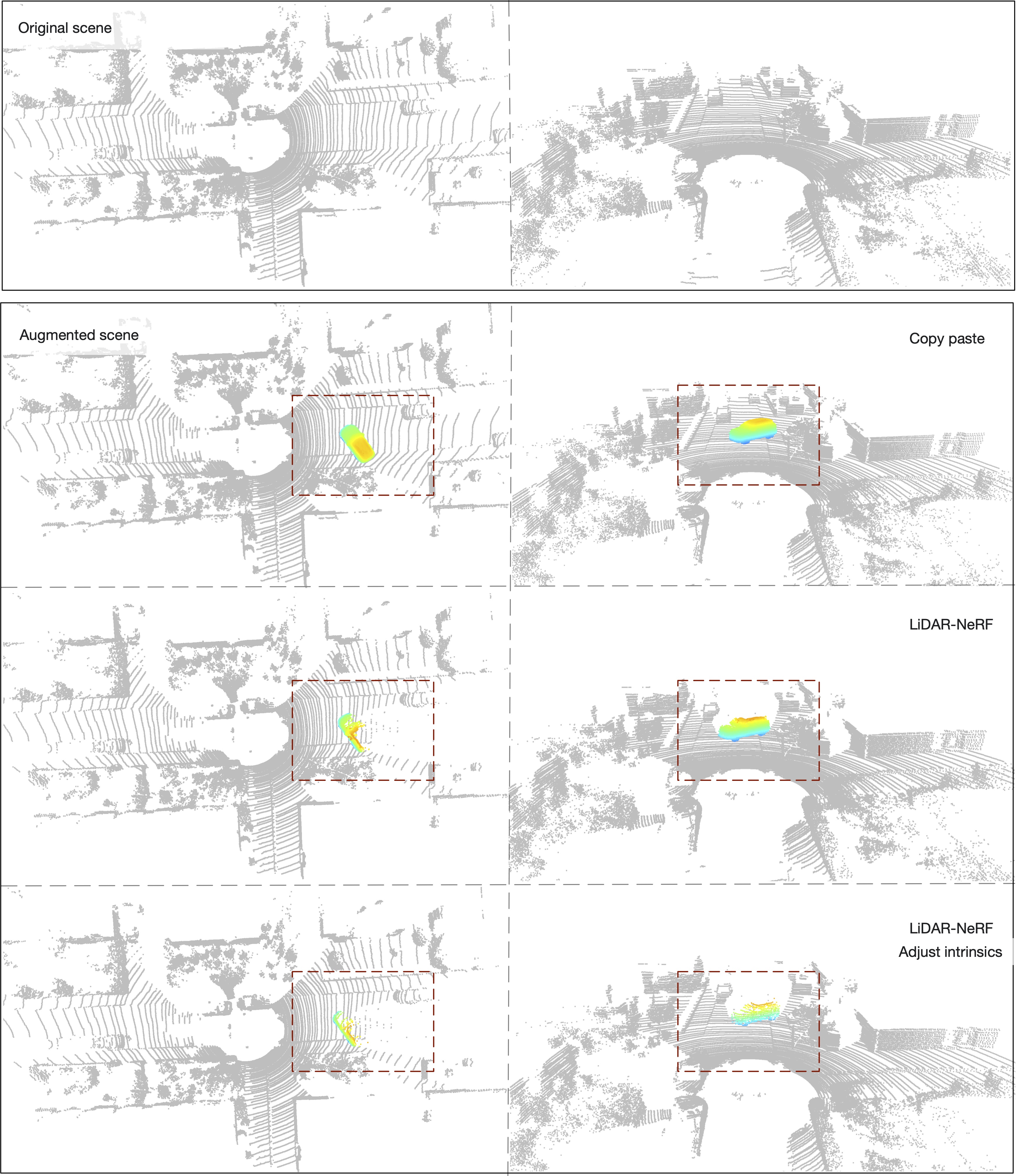

The scene augmented with our LiDAR-NeRF exhibits realistic occlusion effects and consistent LiDAR patterns thanks to our pseudo range-image formalism, in contrast to the common copy-paste strategy.
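Once a scene and an inserted object are rendered on the same range-image grid from the same sensor pose, occlusion reduces to a per-pixel depth test: each beam keeps whichever return is nearer. The sketch below illustrates that idea with hypothetical names; it is not the authors' augmentation pipeline, and it assumes a range of 0 marks a pixel with no return.

```python
import numpy as np


def composite_range_images(scene_range, obj_range, scene_int, obj_int):
    """Hypothetical helper: z-buffer merge of two (H, W) range images.

    A range of 0 marks a pixel with no return (dropped ray).
    """
    # Treat missing returns as infinitely far so any real return wins.
    s = np.where(scene_range > 0, scene_range, np.inf)
    o = np.where(obj_range > 0, obj_range, np.inf)
    take_obj = o < s
    merged_range = np.where(take_obj, o, s)
    merged_range[np.isinf(merged_range)] = 0.0   # still no return
    merged_int = np.where(take_obj, obj_int, scene_int)
    return merged_range, merged_int
```

Unlike naive copy-paste of points, this discards background returns hidden behind the inserted object, and object pixels that lie behind existing scene geometry are dropped.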

@article{tao2023lidar,

title={LiDAR-NeRF: Novel LiDAR View Synthesis via Neural Radiance Fields},

author={Tao, Tang and Gao, Longfei and Wang, Guangrun and Lao, Yixing and Chen, Peng and Zhao, Hengshuang and Hao, Dayang and Liang, Xiaodan and Salzmann, Mathieu and Yu, Kaicheng},

journal={arXiv preprint arXiv:2304.10406},

year={2023}

}